The growing use of 3D in immersive retail, robotics, and digital twins has revealed a fundamental constraint: delivering high-fidelity 3D content to millions of users economically requires continuous streaming, not download-and-load architectures.

The industry has optimized 3D representations extensively (from polygon meshes to Gaussian splats to neural radiance fields), but the delivery model has remained largely unchanged. Users download complete datasets before interaction begins. Developers optimize assets for specific platforms and compile them into fixed binaries. Infrastructure provisions for peak capacity rather than adapting to demand.

This download paradigm creates consistent bottlenecks regardless of the underlying representation format. Users wait through installations and asset downloads before they can interact with experiences. Switching between experiences means repeating the cycle. Development timelines stretch as teams optimize for each target platform.

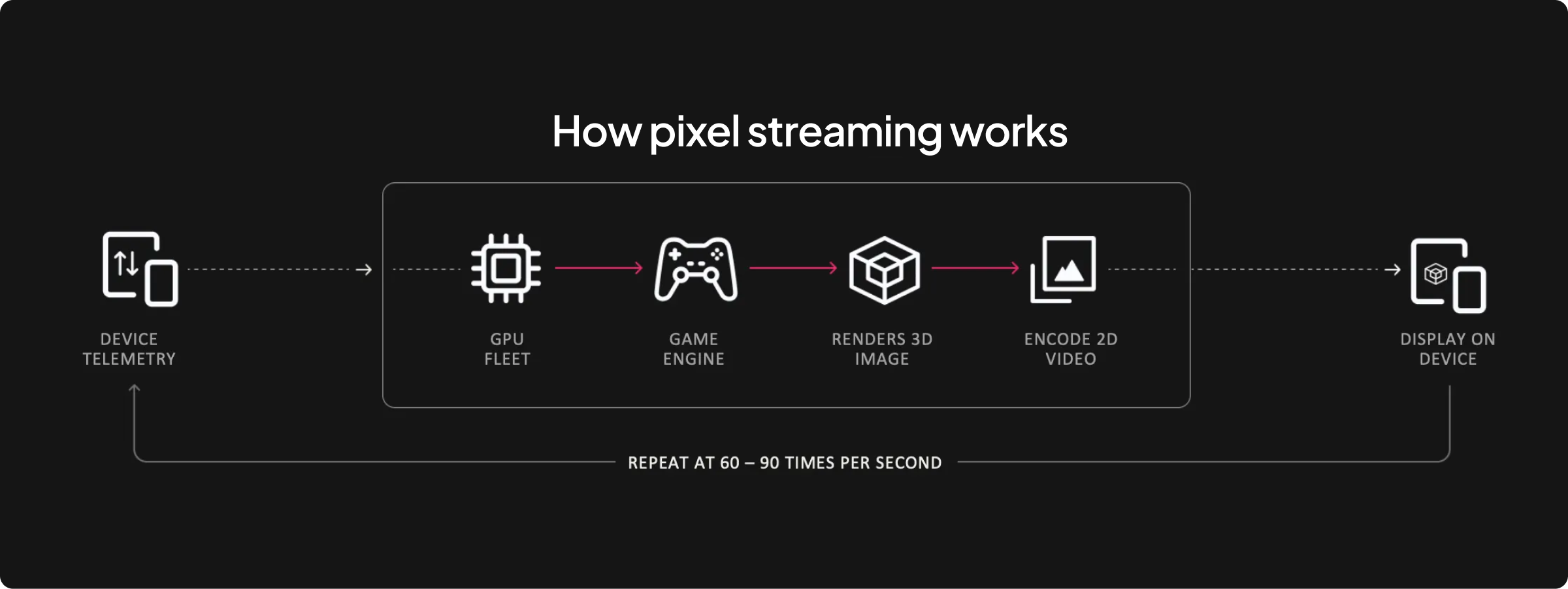

Pixel streaming solutions such as NVIDIA’s CloudXR and Meta’s Horizon Hyperscape attempt to eliminate downloads by rendering scenes on cloud GPUs and transmitting video feeds. But this approach introduces different constraints: expensive and scarce GPU infrastructure, fixed capacity limits on concurrent users, and latency sensitivity that degrades experience quality as users get farther from the servers.

The fundamental issue isn’t which 3D representation to use; it’s that neither download-based nor pixel-streaming architectures were designed to deliver high-fidelity 3D content at internet scale.

Cost: Edge GPU infrastructure is expensive and scarce. Pixel streaming a single photorealistic scene can require high-end GPUs positioned within milliseconds of users, an economically unsustainable model for million-user distribution. Delivering 3D content directly to browsers avoids those GPUs, but converting high-fidelity content into web-compliant formats such as glTF (GL Transmission Format) takes time and sacrifices visual fidelity.

Scale: Cloud GPUs have hard capacity limits. Each active pixel stream needs its own allocation of GPU resources, so serving more simultaneous users means provisioning more GPUs in advance, which makes sudden spikes in demand difficult to absorb.

Speed: Users face friction in every distribution channel today. Native applications require browsing an app store, downloading large files, waiting for installation, and launching, all before interaction begins. Web-based experiences eliminate installation but still require downloading complete assets upfront. To keep load times acceptable, developers often reduce visual fidelity significantly, trading quality for faster downloads. Switching between experiences means repeating this cycle. Development cycles stretch to 6–18 months as teams optimize and compress assets for each target platform and distribution channel.

Miris fundamentally reimagines the delivery stack. Traditional approaches force a choice between streaming pixels from cloud GPUs, baking 3D assets into downloadable applications, or delivering low-fidelity 3D in web browsers. Miris eliminates this tradeoff by streaming the 3D spatial data itself (geometry, appearance, and material properties) as a continuous, adaptive process, with the scene reconstructed on the client device.

This architectural distinction changes how 3D content can be created, delivered, and consumed at scale.

Traditional 3D delivery works like video before adaptive streaming existed: you had to download the entire file, at whatever resolution was baked in, before playback could begin. If you wanted higher quality, you downloaded a different, larger file. The same constraint applies to 3D today: download complete geometry and texture sets at fixed fidelity, wait for the transfer to complete, then interact.

Miris applies the same paradigm shift to 3D that adaptive streaming brought to video. Instead of downloading complete assets at fixed quality, we transmit a highly optimized spatial representation that progressively refines its level of detail in real time. The experience starts immediately at reduced fidelity, then continuously improves as more data arrives, prioritizing the detail that matters most to what you’re viewing at any given moment.
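To make the difference concrete, here is the back-of-the-envelope arithmetic for time to first interaction. The asset sizes and link speed are hypothetical, chosen only for illustration; the point is that a progressive stream waits only for its first, coarsest level rather than for the whole asset.

```python
def seconds_to_transfer(megabytes: float, link_mbps: float) -> float:
    """Time to move a payload of `megabytes` (MB) over a link of `link_mbps` (Mbit/s)."""
    return megabytes * 8 / link_mbps  # 8 bits per byte


# Hypothetical figures for illustration only; not measurements of any product.
FULL_ASSET_MB = 500.0    # complete scene, download-and-load
COARSE_LEVEL_MB = 5.0    # first progressive level, enough to start rendering
LINK_MBPS = 50.0

download_wait = seconds_to_transfer(FULL_ASSET_MB, LINK_MBPS)
progressive_wait = seconds_to_transfer(COARSE_LEVEL_MB, LINK_MBPS)
print(f"download-and-load: {download_wait:.1f} s")    # download-and-load: 80.0 s
print(f"progressive start: {progressive_wait:.1f} s")  # progressive start: 0.8 s
```

The remaining data still has to arrive in the progressive case, but it arrives while the user is already interacting.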

Think of how video plays on YouTube or Netflix: it starts instantly, sometimes looking blocky initially, then progressively sharpens as the stream adapts to your bandwidth and the content you’re watching. Miris does the same thing for navigable 3D space. Your device receives spatial data that can be rendered immediately and refined progressively, adapting to your network conditions, hardware capabilities, and viewing focus.
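The video half of this analogy is well understood: an adaptive bitrate player estimates throughput from recent downloads and picks the highest quality level that fits. The minimal sketch below applies that idea to choosing a spatial detail level. The smoothing factor, safety margin, and per-level data rates are hypothetical parameters for illustration, not Miris’ protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThroughputEstimator:
    """Exponentially weighted moving average (EWMA) of measured throughput in Mbit/s."""
    alpha: float = 0.3                     # weight of the newest sample (hypothetical)
    estimate_mbps: Optional[float] = None  # no estimate until the first download completes

    def add_sample(self, megabytes: float, seconds: float) -> float:
        """Fold one completed download into the running estimate and return it."""
        sample = megabytes * 8 / seconds
        if self.estimate_mbps is None:
            self.estimate_mbps = sample
        else:
            self.estimate_mbps = self.alpha * sample + (1 - self.alpha) * self.estimate_mbps
        return self.estimate_mbps


def pick_detail_level(level_mbps: list[float], estimate_mbps: float, safety: float = 0.8) -> int:
    """Return the finest level whose data rate fits within `safety` x the estimate.

    `level_mbps` is ordered coarse to fine; level 0 is always returned as a
    fallback so something can be rendered even on a very slow link.
    """
    budget = estimate_mbps * safety
    best = 0
    for level, rate in enumerate(level_mbps):
        if rate <= budget:
            best = level
    return best


# Hypothetical per-level data rates, coarse to fine.
LEVELS = [2.0, 8.0, 20.0, 40.0]
est = ThroughputEstimator()
print(pick_detail_level(LEVELS, est.add_sample(2.5, 1.0)))   # 20 Mbit/s -> level 1
print(pick_detail_level(LEVELS, est.add_sample(10.0, 1.0)))  # ~38 Mbit/s -> level 2
```

The safety margin keeps the chosen level slightly below the estimated capacity, which trades a little fidelity for fewer stalls when throughput dips.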

1. AI-Driven Adaptive Fidelity

Miris’ streaming protocol doesn’t just adapt bitrate like video streaming; it adapts the underlying spatial representation in real time, optimizing across several dimensions at once: network conditions, device hardware capabilities, and the user’s viewing focus.

Miris automatically decides what data to send and how to send it, adapting moment by moment to keep experiences fluid even when streaming across continents. Pixel streaming struggles at these distances because every change of viewpoint must travel to a remote server and come back as video before it appears on screen. Miris renders on the client from spatial data it has already received, so camera movement does not wait on a network round trip, and users perceive virtually no latency. The result is immediate interactivity that progressively improves, rather than a wait for complete downloads.
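One way to picture optimization across several dimensions at once is a request planner that ranks pieces of spatial data by visual benefit per byte. In the minimal sketch below, bandwidth becomes a byte budget, device capability becomes a maximum detail level, and viewing focus enters through each region’s screen coverage and a focus weight. The `Chunk` type, the scoring formula, and the greedy strategy are all hypothetical, chosen for illustration; they are not Miris’ actual algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """Hypothetical unit of spatial data: one region at one detail level."""
    region: str
    level: int              # 0 = coarsest; level n refines level n - 1
    size_bytes: int
    screen_coverage: float  # fraction of the viewport the region covers (0..1)
    focus_weight: float     # 1.0 at the center of view, smaller toward the periphery


def value_per_byte(c: Chunk) -> float:
    # Visible, in-focus regions score higher; each extra level adds less benefit.
    return c.screen_coverage * c.focus_weight / (1 + c.level) / c.size_bytes


def plan_requests(chunks: list[Chunk], byte_budget: int, max_level: int) -> list[Chunk]:
    """Greedily pick chunks by value per byte, never requesting a refinement before its parent."""
    available = {(c.region, c.level): c for c in chunks if c.level <= max_level}
    next_level = {c.region: 0 for c in chunks}  # next level each region may request
    plan: list[Chunk] = []
    spent = 0
    while True:
        candidates = [
            available[(region, lvl)]
            for region, lvl in next_level.items()
            if (region, lvl) in available
            and spent + available[(region, lvl)].size_bytes <= byte_budget
        ]
        if not candidates:
            return plan
        best = max(candidates, key=value_per_byte)
        plan.append(best)
        spent += best.size_bytes
        next_level[best.region] += 1


chunks = [
    Chunk("shelf", 0, 100, 0.5, 1.0),    # in focus
    Chunk("shelf", 1, 400, 0.5, 1.0),
    Chunk("shelf", 2, 1600, 0.5, 1.0),
    Chunk("ceiling", 0, 100, 0.3, 0.2),  # peripheral
    Chunk("ceiling", 1, 400, 0.3, 0.2),
]
plan = plan_requests(chunks, byte_budget=700, max_level=1)
print([(c.region, c.level) for c in plan])
# [('shelf', 0), ('shelf', 1), ('ceiling', 0)]
```

Lowering `max_level` models a weaker device, shrinking `byte_budget` models a slower link, and moving the view changes the focus weights; the same planner then produces a different request order.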

2. Resolution-Independent Progressive Refinement

Field-based representations are naturally compatible with progressive enhancement. Initial samples arrive quickly, providing immediate interactivity at reduced fidelity. As additional data streams in, the client progressively refines the representation, much as an adaptive video stream sharpens, but for navigable 3D space.

This matches the consumption pattern of unreliable consumer networks far better than mesh-based approaches, which typically require complete topology transmission before any rendering can occur.
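A simple way to see why a field-like representation tolerates partial delivery is a coarse-to-fine coefficient stream in which every prefix decodes to a complete, if blurrier, approximation. The sketch below uses a one-dimensional Haar wavelet pyramid purely as a stand-in; it is not Miris’ encoding, and real spatial fields are three-dimensional, but it exhibits the prefix-decodable property described above.

```python
def encode(signal: list[float]) -> list[float]:
    """Unnormalized Haar transform, serialized coarse to fine: [mean, coarsest details, ..., finest details]."""
    n = len(signal)
    assert n > 0 and n & (n - 1) == 0, "length must be a power of two"
    details_fine_to_coarse = []
    current = [float(x) for x in signal]
    while len(current) > 1:
        pairs = list(zip(current[0::2], current[1::2]))
        details_fine_to_coarse.append([(a - b) / 2 for a, b in pairs])
        current = [(a + b) / 2 for a, b in pairs]
    stream = current  # [overall mean]
    for details in reversed(details_fine_to_coarse):
        stream.extend(details)
    return stream


def decode(prefix: list[float], n: int) -> list[float]:
    """Rebuild n samples from however much of the stream has arrived; missing details count as 0."""
    coeffs = list(prefix) + [0.0] * (n - len(prefix))
    current = [coeffs[0]]
    pos = 1
    while len(current) < n:
        details = coeffs[pos:pos + len(current)]
        pos += len(current)
        # Each (mean, detail) pair expands back into two samples.
        current = [v for m, d in zip(current, details) for v in (m + d, m - d)]
    return current


stream = encode([4, 2, 6, 8])
for received in range(1, len(stream) + 1):
    print(received, decode(stream[:received], 4))
# 1 [5.0, 5.0, 5.0, 5.0]
# 2 [3.0, 3.0, 7.0, 7.0]
# 3 [4.0, 2.0, 7.0, 7.0]
# 4 [4.0, 2.0, 6.0, 8.0]
```

Each additional coefficient sharpens part of the signal, and the last one makes the reconstruction exact; at no point does the client need the full stream before it can draw something.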

3. Serverless, Hardware-Independent Architecture

Because Miris prepares and transmits 3D spatial field data rather than rendered pixels, our architecture operates without GPU or CPU server dependencies when distributing content to users. Miris uses GPUs upfront to condition assets for streaming. When it’s time to deliver the content to consumers, there’s no need for cloud GPUs, specialized rendering hardware, or server provisioning.

This removes GPU rendering capacity as a limit on how many users can connect. Streaming costs drop by orders of magnitude, and our serverless model behaves like a CDN: content is delivered from optimal locations while Miris manages the distribution infrastructure. The economic model shifts from hardware-constrained to bandwidth-constrained, which scales far more favorably: costs grow with the data actually delivered rather than with GPU capacity provisioned for peak concurrent users. As with video CDNs, frequently accessed spatial data is cached at edge locations, further reducing delivery costs and latency as content reaches larger audiences.
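The shift from hardware-constrained to bandwidth-constrained economics can be written as two toy cost functions. Pixel streaming pays for GPUs sized for peak concurrency for every hour they are provisioned; spatial streaming pays per byte delivered, and cache hits at the edge avoid paying origin egress again. Every parameter here, including the split between edge and origin pricing, is a hypothetical simplification rather than any provider’s actual billing; the point is which variable each cost depends on, not the numbers.

```python
import math


def pixel_streaming_cost(peak_concurrent_users: int, streams_per_gpu: int,
                         gpu_usd_per_hour: float, hours: float) -> float:
    """GPUs are sized for the peak and billed for every provisioned hour, busy or idle."""
    gpus = math.ceil(peak_concurrent_users / streams_per_gpu)
    return gpus * gpu_usd_per_hour * hours


def spatial_streaming_cost(sessions: int, gb_per_session: float,
                           edge_usd_per_gb: float, origin_usd_per_gb: float,
                           cache_hit_ratio: float) -> float:
    """Cost follows bytes delivered; only cache misses also pay origin egress."""
    delivered_gb = sessions * gb_per_session
    origin_gb = delivered_gb * (1 - cache_hit_ratio)
    return delivered_gb * edge_usd_per_gb + origin_gb * origin_usd_per_gb


# Hypothetical inputs: doubling the peak doubles the GPU bill ...
print(pixel_streaming_cost(400, 4, 2.0, 720), pixel_streaming_cost(800, 4, 2.0, 720))
# 144000.0 288000.0
# ... while a higher cache hit ratio lowers the cost of serving the same sessions.
print(spatial_streaming_cost(10_000, 0.5, 0.02, 0.05, 0.5)
      > spatial_streaming_cost(10_000, 0.5, 0.02, 0.05, 0.9))
# True
```

Both functions are linear, but in different quantities: one in provisioned peak capacity, the other in bytes actually served.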

But architecture alone isn’t enough. To fully realize these benefits, streamed 3D content must integrate seamlessly with existing workflows and applications.

By eliminating per-user GPU infrastructure dependencies, enabling instant content switching, and delivering adaptive fidelity across any network condition, Miris transforms the economics and user experience of 3D content delivery. This unlocks new possibilities across industries that have been constrained by traditional approaches, from immersive retail to robotics and digital twins.

Miris is redefining 3D content delivery. By embracing modern graphics technologies, eliminating per-user GPU infrastructure dependencies, and building adaptive streaming intelligence into the protocol itself, we’ve addressed the cost-scale-speed constraints that have limited 3D distribution for decades.

Content that previously took weeks to prepare and distribute now deploys in hours. Infrastructure costs scale with actual bandwidth usage rather than provisioned GPU capacity. Experiences that required multi-gigabyte downloads and device-specific builds now run instantly on any device. Build once at high fidelity and deliver everywhere, adapting to whatever device each user has.

The infrastructure to make 3D content as ubiquitous as video already exists. This is what Miris has been working on for years, and we’re really proud of what we’re building.

Interested in bringing true spatial streaming to your platform? Connect with our team to explore how Miris can transform your 3D delivery pipeline.